Ethics and bias in computational linguistics are structural rather than incidental problems. The task is not merely one of “cleaning the data,” but of critically rethinking the relationship between language, society, and technology. As Bender et al. (2021) point out, language models do not actually understand language; they model its statistical regularities, and thereby risk amplifying preexisting distortions.

Errors and distortions in the diagnostic field raise ethical issues of particular significance, and the bioethical implications of computational diagnoses call for deep reflection. Research must be responsible, taking into account not only technical performance but also social, environmental, and political effects.

Large language models are described as “stochastic parrots” because they do not truly understand language: they repeat and recombine, in a statistical manner, what they have learned from data, and they can generate text that is plausible yet devoid of genuine semantic understanding. This last point is particularly relevant to a diagnostic process that requires understanding not only meaning but also the implicit sense conveyed in verbal language, a sense that cannot be reduced to statistical formulas or numbers.

Moreover, in psychiatry the physician makes a diagnosis with a view to treatment: do the posts and social media content analyzed for diagnostic purposes actually express a request for care? Diagnosis can become an intrusion into private life, an unsolicited stigmatization experienced as a form of violence. “Psychiatric surveillance,” in which algorithms continuously read behaviors or texts on social media to assign labels without human contact, is a practice that must be avoided or subjected to specific procedures and limitations tied to the patient’s explicit consent.

Ultimately, the diagnosis and, consequently, the treatment are always formulated by the physician, who bears legal responsibility for the medical decision. Technical tools such as laboratory tests, radiographic examinations, CT scans, or MRI are helpful in assessing the clinical situation, but they can in no way replace human evaluation and interpretation. The same applies to AI: in psychiatry it may be regarded as a cognitive prosthesis, encompassing psychometrics and the analysis of language, but it cannot replace human judgment.

Osgood, C. E., Suci, G. J., & Tannenbaum, P. H. (1957). The measurement of meaning. Urbana: University of Illinois Press.

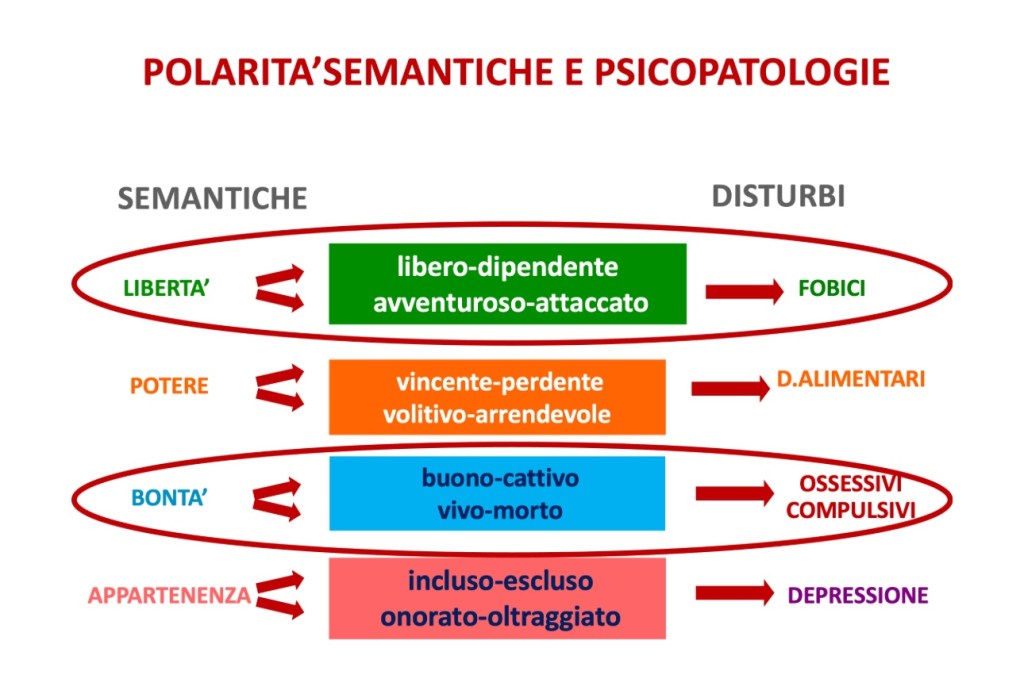

Ugazio, V. (2012). Storie permesse, storie proibite. Polarità semantiche familiari e psicopatologie (2nd expanded ed.). Milano: Bollati Boringhieri.

Bender, E. M., Gebru, T., McMillan-Major, A., & Shmitchell, S. (2021). On the dangers of stochastic parrots: Can language models be too big? In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency (pp. 610–623). https://doi.org/10.1145/3442188.3445922

Clark, A., & Chalmers, D. (1998). The extended mind. Analysis, 58(1), 7–19. https://doi.org/10.1093/analys/58.1.7

Computational pragmatics

Pragmatics relies on shared knowledge, a common world, and intentions, all of which are very difficult to represent in a computational system. For this reason, models such as large language models (LLMs) attempt to simulate pragmatics statistically, but they do not always truly “understand”: they often fall back on patterns learned from the data.